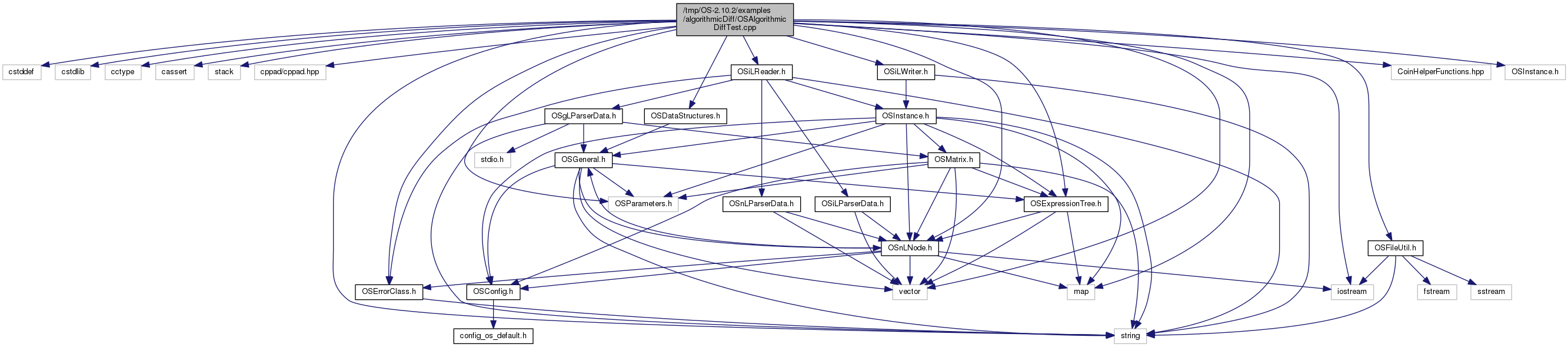

#include <cstddef>#include <cstdlib>#include <cctype>#include <cassert>#include <stack>#include <cppad/cppad.hpp>#include <iostream>#include "CoinHelperFunctions.hpp"#include "OSInstance.h"#include "OSiLWriter.h"#include "OSParameters.h"#include "OSnLNode.h"#include "OSErrorClass.h"#include "OSFileUtil.h"#include "OSiLReader.h"#include "OSExpressionTree.h"#include "OSDataStructures.h"#include <vector>#include <map>#include <string>

Go to the source code of this file.

Functions | |

| int | main () |

| bool | CheckFunctionValues (double *conVals, double objValue, double x0, double x1, double x2, double x3, double z0, double z1, double w) |

| bool | CheckGradientValues (SparseJacobianMatrix *sparseJac, double *objGrad, double x0, double x1, double x2, double x3, double y0, double y1, double w) |

| bool | CheckHessianUpper (SparseHessianMatrix *sparseHessian, double x0, double x1, double x2, double x3, double z0, double z1, double w) |

Detailed Description

- Remarks

- Copyright (C) 2005-2011, Robert Fourer, Horand Gassmann, Jun Ma, Kipp Martin, Northwestern University, Dalhousie University and the University of Chicago. All Rights Reserved. This software is licensed under the Eclipse Public License. Please see the accompanying LICENSE file in root directory for terms.

This example illustrates the algorithmic differentiation features of the OS library

The test problem CppADTestLag.osil:

min x0^2 + 9*x1 – w[0]

s.t.

33 - 105 + 1.37*x1 + 2*x3 + 5*x1 <= 10 – y[0]

ln(x0*x3) + 7*x2 >= 10 – y[1]

Note: in the first constraint 33 is a constant term and 105

is part of the nl node

the Jacobian is:

2*x0 9 0 0

0 6.37 0 2

1/x0 0 7 1/x3

now set x0 = 1, x1 = 5, x2 = 10, x3 = 5

the Jacobian is

2 9 0 0

0 6.37 0 2

1 0 7 .2

Now form a Lagrangian with multipliers of w on the objective z0 the multiplier on the first constraint and z1 on the second the Lagrangian is then:

L = w*(x0^2 + 9*x1) + z0*(1 + 1.37*x1 + 2*x3 + 5*x1) + z1*(log(x0*x3) + 7*x2)

the partial with respect to x0

L_0 = 2 * w * x0 + z1 / x0

the partial with respect to x1

L_1 = w * 9 + z0*1.37 + z0*5

the partial with respect to x2

L_2 = z1*7

the partial with respect to x3

L_3 = z0*2 + z1/x3

in the Hessian there are only two nonzero terms however the code will think there may be three since it does not know log(x0*x3) = log(x0) + log(x3)

L_00 = 2 * w - z1 / ( x0 * x0 )

L_33 = - z1 / (x3 * x3)

IMPORTANT: the forwardAD and reverseAD calls ONLY apply to the nonlinear part of the problem. The 9*x1 term in the objective is not part of the AD calculation nor are any terms in <linearConstraintCoefficients> that DO NOT appear in any nl nodes, for example the 7*x2 term in constraint with index 1. Note also, that there are only three variables that appear in nl nodes, x2 does not appear in an nl node

Definition in file OSAlgorithmicDiffTest.cpp.

Function Documentation

| int main | ( | ) |

create function with domain the variables and range objective function plus constraint values IMPORTANT: the forwardAD and reverseAD calls ONLY apply to the nonlinear part of the problem. The 9*x1 term in the objective is not part of the AD calculation nor are any terms in <linearConstraintCoefficients> that DO NOT appear in any nl nodes, for example the 7*x2 term in constraint with index 1. Note also, that there are only three variables that appear in nl nodes; x2 does not

get an index map of the nonlinear variables and see which variable are in <nonlinearExpressions> element

iterate through and get an index of all variables that are in <nonlinearExpressions> element

Now start to calculate function values and derivatives there are three nonlinear variables and we put their values into the vector x0

now use forward to get the mixed second partials with respect to variable x0 and x3 of every function in the range space

Since we want the mixed partials of x0 and x3 we take the sum of the appropriate unit vectors and do a forward order 1 again

function values cannot be calculated with the reverse sweep, in order to do a reverse sweep, a forward sweep with at least p = 0 must be complete

in the example below we get the third row of the Jacobian using a reverse sweep.

for everything below to work out we must go back and do a forward sweep with respect to third unit vector, i.e. we do a forwardAD(1, e^{3})

reverseAD(2, vlambda) will produce a vector of size 2*n, the terms 0, 2, 4, 2*n - 2 will be the first partials of the Lagrangian formed using vlambda the terms 1, 3, ..., 2*n - 1 will be column (row) row i of the Hessian of Lagrangian assuming we did a forwardAD(1, e^{i}) where e^{i} is the i'th unit vector

get the third row (column) of the Lagrangian of the Hessian matrix, it the third row because we did a forwardAD(1, e^{3})

most solver APIs work with pointers and not vectors so the OS API works with pointer here the values of the primal and Lagrange multipliers that we use

first we show different calls to get constraint and objective function values, many of this function calls are overloaded, for example, to evaluate all of the constraints at the current value of x use

calculateAllConstraintFunctionValues(double* x, double *objLambda, double *conLambda, bool new_x, int highestOrder)

if you want the functions values calculated using the algorithmic differentiation package. This may be desirable if you are also going to calculate derivatives at the same time. However, use

calculateAllConstraintFunctionValues(double* x, bool new_x)

if you are going to do a lot of function evaluations without derivative calculations, this way you do not need to use operator overloading

Similar remarks apply to the objective functions

if you want to get a function value by index here that signature

double calculateFunctionValue(int idx, double* x, bool new_x);

for example, use calculateFunctionValue(-1, x, true) to get the first objective function value

Most solvers that provide call backs want sparsity pattern. Jacobian and Hessain sparsity patters are provided by the OSInstance API

Most solvers that provide call backs want Jacobian calculation. Constraint Jacobians and objective function gradients are provided OSInstance API the basic signature for these functions is

calculateAllConstraintFunctionGradients(double* x, double *objLambda, double *conLambda, bool new_x, int highestOrder)

If we have already calculated function values using the AD routine, then highestOrder = 0, if we calculated function values using the OS expression tree then highestOrder = -1. Then if new_x = false and highestOrder = 0 and we make a gradient calcuation, then forwardAD(0, x) WILL NOT be called and there is a savings

Some solvers that provide call backs want the Hessian of the Lagrangian function. This is provided by the OSInstance API

Since we have already made the gradient calcualations we set new_x = false.

If the solver knows that at an iteration all of the derivative information is required then calculating the function values, Jacobian, and Hessian at the same time is the most efficient thing to do. This is by setting highestOrder = 2. Regardless of whether the call is to calculate function values for object or constraint, Jacobians, or even Hessian, setting highestOrder = 2 results in all derivative being calculated

If we just wanted objective function values we could have used

osinstance->calculateAllObjectiveFunctionValues( x, w, z, true, 0)

--OR --

calculateAllObjectiveFunctionValues(double* x, bool new_x)

however since we make a call with highestOrder = 2 all derivatives are calculated and no other calls are necessary

Since we called calculateAllObjectiveFunctionValues() with highestOrder = 2 the first derivative information is available

we can also calculate the Hessian of any row or objective function the function signature is SparseHessianMatrix calculateHessian( double x, int idx, bool new_x); Below we get the Hessian for row 1 , Note we set new_x to true since the Lagrange multipliers changed

Definition at line 102 of file OSAlgorithmicDiffTest.cpp.

| bool CheckFunctionValues | ( | double * | conVals, |

| double | objValue, | ||

| double | x0, | ||

| double | x1, | ||

| double | x2, | ||

| double | x3, | ||

| double | z0, | ||

| double | z1, | ||

| double | w | ||

| ) |

Definition at line 753 of file OSAlgorithmicDiffTest.cpp.

| bool CheckGradientValues | ( | SparseJacobianMatrix * | sparseJac, |

| double * | objGrad, | ||

| double | x0, | ||

| double | x1, | ||

| double | x2, | ||

| double | x3, | ||

| double | y0, | ||

| double | y1, | ||

| double | w | ||

| ) |

Definition at line 776 of file OSAlgorithmicDiffTest.cpp.

| bool CheckHessianUpper | ( | SparseHessianMatrix * | sparseHessian, |

| double | x0, | ||

| double | x1, | ||

| double | x2, | ||

| double | x3, | ||

| double | z0, | ||

| double | z1, | ||

| double | w | ||

| ) |

Definition at line 811 of file OSAlgorithmicDiffTest.cpp.

1.8.5

1.8.5